TIL that OpenAI charges for API usage of its language models in units called “tokens”. The price per token depends on which model you use (GPT-4, GPT-3.5, etc.).
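
To make the per-token pricing concrete, here is a minimal sketch of the arithmetic. The model names and prices in it are made up for illustration (they are not OpenAI's actual rates), and `estimate_cost` is a hypothetical helper, not part of any OpenAI library.

```python
# Hypothetical prices in US dollars per token.
# These numbers are placeholders, NOT real OpenAI prices.
HYPOTHETICAL_PRICE_PER_TOKEN = {
    "model-a": 0.00003,
    "model-b": 0.000002,
}


def estimate_cost(model: str, tokens: int) -> float:
    """Return the estimated cost in dollars for a given number of tokens."""
    if tokens < 0:
        raise ValueError("tokens must be non-negative")
    return tokens * HYPOTHETICAL_PRICE_PER_TOKEN[model]


if __name__ == "__main__":
    # Same token count, different model, different bill.
    for name in HYPOTHETICAL_PRICE_PER_TOKEN:
        print(name, estimate_cost(name, 1000))
```

Swap in the current prices from OpenAI's pricing page to get real numbers.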

There’s no direct translation from tokens to characters, but OpenAI’s tokenizer is a simple tool that shows how many tokens a given text comes to in GPT-3. (They don’t seem to have one for GPT-4.)

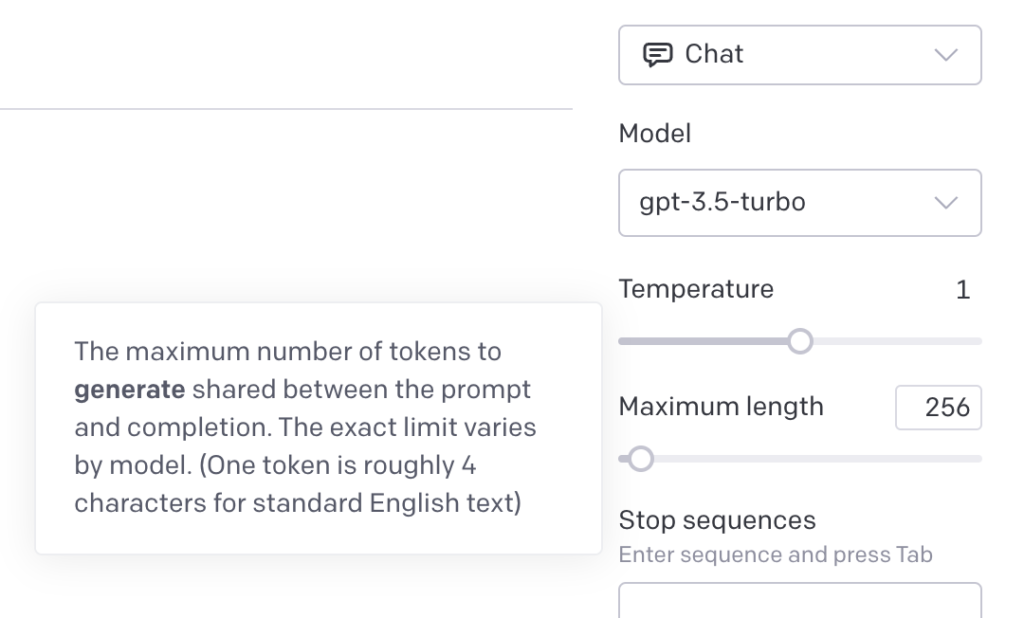
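
If you just want a ballpark figure without opening the tokenizer, a rough rule of thumb for English text is about four characters per token. The sketch below assumes that ratio; it is only an estimate, and the real count depends on the tokenizer and the text, so treat `estimate_tokens` as a hypothetical helper rather than a replacement for the actual tool.

```python
import math

# Assumption: roughly 4 characters per token for typical English text.
# This is a rule of thumb, not an exact conversion.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate based on character count alone."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


if __name__ == "__main__":
    prompt = "recite SONG OF MYSELF BY WALT WHITMAN"
    print(estimate_tokens(prompt))
```

For anything where the exact number matters (like billing), use the tokenizer itself.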

If you play around with OpenAI’s Playground, you can set how many tokens you allow for the input and output combined. The input is what you enter into OpenAI, and the output is what OpenAI responds with. That’s the “Maximum length” setting, and by default it’s set to 256 tokens.

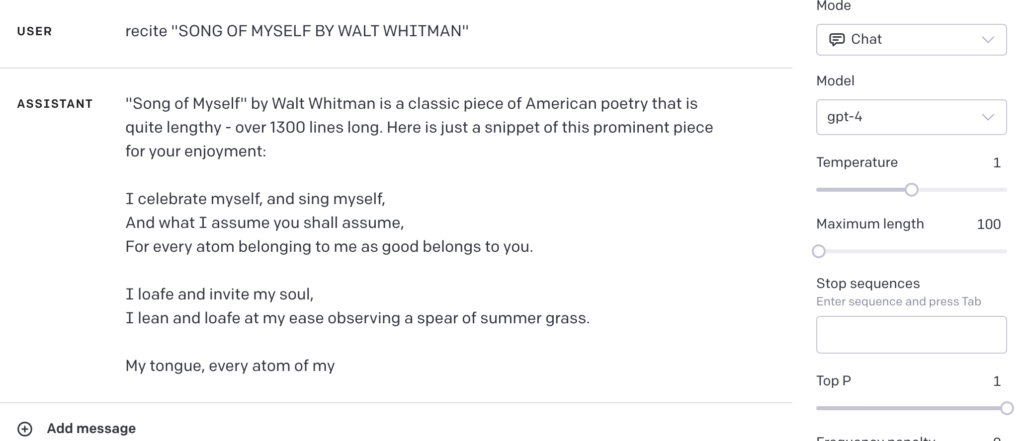

Here you can see an example of a 100-token setting. This includes both the input (recite “SONG OF MYSELF BY WALT WHITMAN”) and the output (the text that “Assistant” wrote, ending with “My tongue, every atom of my”).
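
The budget described above can be sketched as simple subtraction: whatever the input uses up is no longer available for the output. This assumes the setting behaves as described in this post (one limit shared by input and output); `remaining_output_tokens` is a hypothetical helper and the token counts in the example are made up.

```python
def remaining_output_tokens(max_length: int, input_tokens: int) -> int:
    """Return how many tokens are left for the output under a shared limit."""
    # If the input alone exceeds the limit, nothing is left for the output.
    return max(0, max_length - input_tokens)


if __name__ == "__main__":
    # Example: a 100-token limit and an input assumed to be 12 tokens long.
    print(remaining_output_tokens(100, 12))
```

That is why the response in the screenshot stops mid-sentence: the limit ran out before the poem did.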

If you’re looking for a good explanation of what tokens in OpenAI are, this is actually a good walkthrough:
